Simulations: How AI agents get tested and trusted

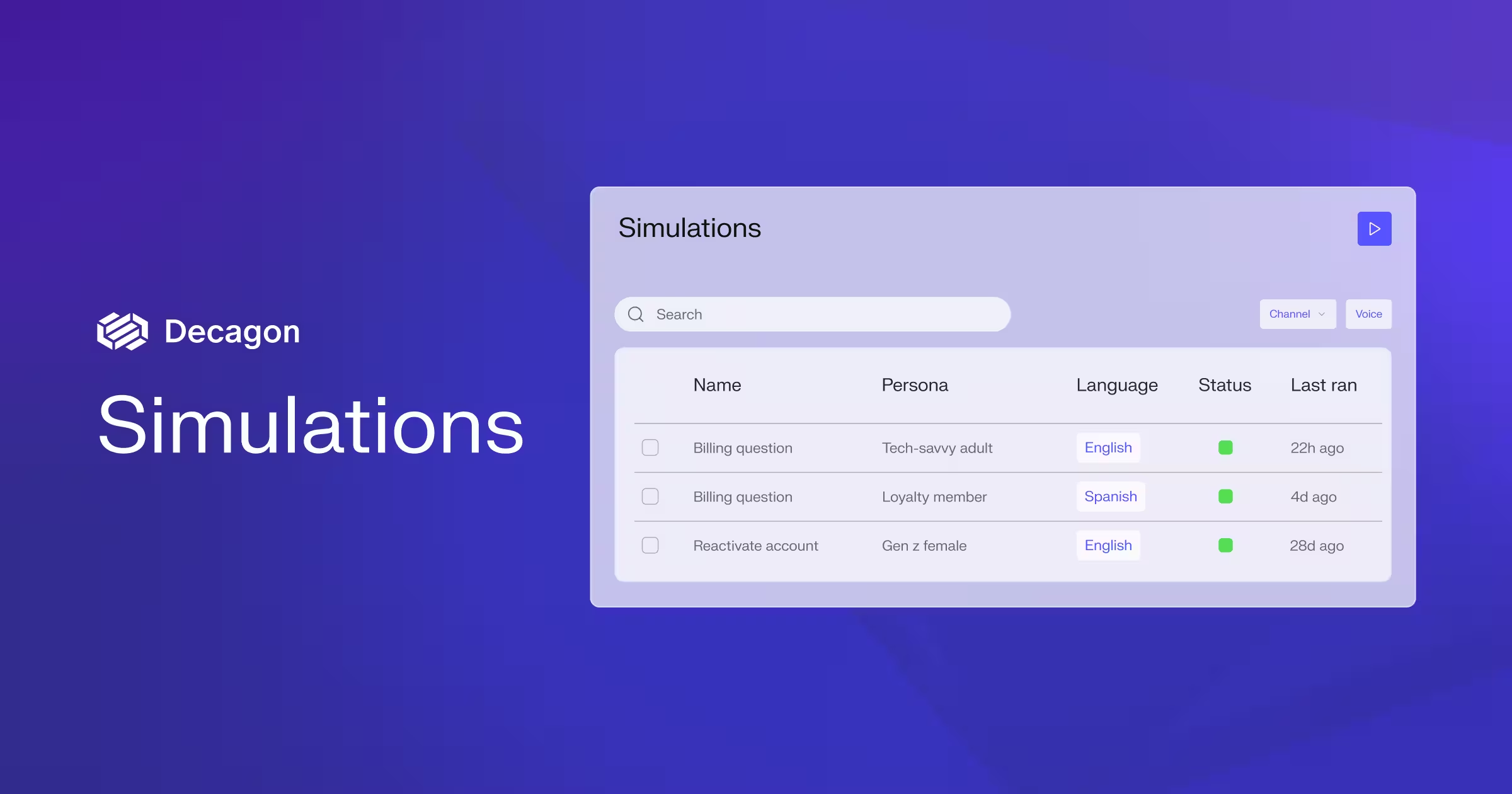

Introducing Simulations, a new way to test your AI agents like real users would, across channels, intents, and edge cases.

AI agents don’t behave like traditional software. The same input can yield different outputs depending on context. That makes it hard to trust what your agent will do unless you test it across a wide range of user intents and scenarios.

Simulations brings a new approach to testing, built around your agent’s core logic: Agent Operating Procedures (AOPs). It uses AI to generate conversations from mock users across different channels to thoroughly test your Decagon agent. You define what success looks like, and Simulations tells you whether the AI agent got there. This is how teams move from “it works most of the time” to “we know it works.”

Test AI logic, knowledge, and guardrails

To trust your AI agent in production, you need to pressure-test its behavior across three core areas:

- AOP selection and execution. Does the agent pick the right workflow? Does it follow the steps as intended? These tests verify that the business logic inside your AOPs is being adhered to.

- Knowledge base retrieval. Can the agent pull the right answer from your help center or internal docs? Can it respond clearly and accurately even when questions are phrased in unexpected ways?

- Guardrails and brand tone. Does your agent handle sensitive topics appropriately, escalate to humans when needed, and stay on-brand with frustrated users?

Each batch of simulations runs conversations with a mock persona, tests against expected behavior, and provides a pass/fail result with full traceability. In one enterprise deployment, Simulations surfaced logic gaps and edge cases, allowing us to strengthen AOPs before QA even began.
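
As a rough illustration of that loop, the sketch below models a simulation case as a persona, an opening message, and an expected outcome, then runs it against a stand-in agent and records a pass/fail result with a trace. Every name here (`SimulationCase`, `SimulationResult`, `toy_agent`, `run_case`) is hypothetical and is not Decagon's API; the agent is a keyword-matching stub so the example stays self-contained.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SimulationCase:
    # Hypothetical shape of a test case; not Decagon's schema.
    persona: str
    message: str
    expected_aop: str


@dataclass
class SimulationResult:
    case: SimulationCase
    passed: bool
    trace: list[str] = field(default_factory=list)


def toy_agent(message: str) -> tuple[str, list[str]]:
    """Stand-in agent: picks a workflow by keyword and logs each decision."""
    trace = [f"received: {message!r}"]
    text = message.lower()
    if "refund" in text:
        selected = "refund_request"
    elif "password" in text:
        selected = "password_reset"
    else:
        selected = "escalate_to_human"
    trace.append(f"selected AOP: {selected}")
    return selected, trace


def run_case(case: SimulationCase) -> SimulationResult:
    """Run one case and compare the selected workflow with the expected one."""
    selected, trace = toy_agent(case.message)
    passed = selected == case.expected_aop
    trace.append("PASS" if passed else f"FAIL: expected {case.expected_aop}")
    return SimulationResult(case, passed, trace)


cases = [
    SimulationCase("frustrated customer", "I want a refund NOW", "refund_request"),
    SimulationCase("confused new user", "I can't log in", "password_reset"),
]
results = [run_case(c) for c in cases]
for r in results:
    print(r.case.persona, "->", "pass" if r.passed else "fail")
```

The second case fails because the stub only recognizes the word "password", which is the kind of phrasing gap a trace makes easy to spot.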

As a best practice, teams run simulations before go-live, after launch, and before any major update to AOPs or knowledge. That way, regressions are caught before they ever reach customers.

Cover real scenarios, not hypotheticals

Past conversations are another source of test cases: look at what has worked and what has failed.

Upload historical transcripts from conversations where AI or human agents struggled and turn them into grounded test cases. Decagon autogenerates personas and intents based on actual failure modes so your suite reflects real-world scenarios. You can define metadata, tool outputs, and conversation length, then run simulations multiple times to ensure consistency at scale.

This turns testing from a guessing game into a repeatable, reality-based process.
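
Because agent output can vary between runs, one way to reason about consistency is to repeat the same case and look at the pass rate rather than a single result. The following is a minimal sketch, assuming a hypothetical `flaky_agent` stub that succeeds about 90% of the time and a team-chosen threshold; none of these names or numbers come from Decagon.

```python
import random


def flaky_agent(rng: random.Random) -> bool:
    """Stand-in for one simulated conversation; True means the goal was met."""
    return rng.random() < 0.9  # assumed 90% success rate, for illustration only


def pass_rate(runs: int, seed: int = 0) -> float:
    """Repeat the same case `runs` times and return the fraction that passed."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    passes = sum(flaky_agent(rng) for _ in range(runs))
    return passes / runs


THRESHOLD = 0.95  # assumed bar; choose whatever your team considers acceptable

rate = pass_rate(runs=200)
print(f"pass rate: {rate:.1%}", "OK" if rate >= THRESHOLD else "needs work")
```

A single passing run says little about a non-deterministic system; a pass rate over many runs gives a number you can track over time.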

Voice needs its own playbook

Voice is where complexity and edge cases thrive. Timing, tone, and clarity all shape the experience. Transcription errors, latency between turns, and subtle emotional cues can turn a helpful answer into a frustrating exchange.

Simulations helps teams test how their agent behaves under real-world voice conditions, modeling factors such as:

- Accents

- Speaking speed

- Background noise and interruptions

- Emotional tone like anger, stress, or frustration

These are the messy, human moments that trip up AI agents in production. With Simulations, you can catch them early and ship a voice agent that feels fluent, natural, and trustworthy.
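
One way to think about coverage across these conditions is as a test matrix, where each combination of accent, speed, noise, and tone becomes one scenario. The sketch below enumerates such a matrix with `itertools.product`; the dimension values and the `VoiceScenario` type are illustrative assumptions, not Simulations settings.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class VoiceScenario:
    # Hypothetical container for one combination of voice conditions.
    accent: str
    speed: str
    noise: str
    tone: str


# Illustrative values only; a real suite would use the conditions you care about.
ACCENTS = ["US English", "Indian English", "Scottish English"]
SPEEDS = ["slow", "normal", "fast"]
NOISE = ["quiet room", "street noise", "frequent interruptions"]
TONES = ["neutral", "angry", "stressed"]

scenarios = [VoiceScenario(*combo) for combo in product(ACCENTS, SPEEDS, NOISE, TONES)]

print(len(scenarios))  # 3 * 3 * 3 * 3 = 81 combinations
print(scenarios[0])
```

The matrix grows multiplicatively with each added dimension, so in practice a team might sample from it or prioritize the combinations seen most often in real calls.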

A new layer in your AI stack

Simulations isn’t a bolt-on QA tool. It’s foundational to how AI agents are built, optimized, and trusted.

Static tests still have value, especially for validating specific fixes, but they often fall out of date as your agent evolves. Dynamic tests with Simulations are intent-driven and built to scale with your agent over time. And because they’re built around AOPs, they show both what failed and why.

With Trace View, you can inspect the AI agent’s decision path and debug issues before QA ever gets involved. Instead of reacting to broken experiences in production, teams can proactively improve agent behavior during development.

Close the loop with faster testing

Simulations powers a flywheel of continuous improvement across Decagon’s platform. Run batches of simulations at scale, then create a Watchtower to automate QA of test runs. Update your AOPs or knowledge base to address the issues and rerun simulations to validate fixes. With each cycle, your agent gets sharper, more reliable, and easier to trust in production.
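
The "rerun to validate fixes" step amounts to comparing two sets of results. The sketch below shows one way to do that comparison, assuming results are available as a mapping from case ID to pass/fail; the `compare_runs` helper and the case IDs are hypothetical and do not reflect how Decagon reports results.

```python
from __future__ import annotations


def compare_runs(before: dict[str, bool], after: dict[str, bool]) -> dict[str, list[str]]:
    """Classify cases present in both runs by how their pass/fail status changed."""
    shared = sorted(before.keys() & after.keys())
    return {
        "fixed": [c for c in shared if not before[c] and after[c]],
        "regressed": [c for c in shared if before[c] and not after[c]],
        "still_failing": [c for c in shared if not before[c] and not after[c]],
    }


# Hypothetical case IDs and results, for illustration only.
before = {"refund_angry": False, "login_help": True, "cancel_plan": False}
after = {"refund_angry": True, "login_help": False, "cancel_plan": False}

report = compare_runs(before, after)
print(report)
```

Separating "fixed" from "regressed" matters because a change that repairs one intent can quietly break another.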

If you’re already a Decagon customer, contact your Agent Product Manager for a guided tour and recommended test cases for your top intents. If you’re not yet a customer, get a demo to see how Simulations, AOPs, and Watchtower work together to make trustworthy AI agents.

September 23, 2025